As an SEO specialist, I find sitemaps and robots.txt files to be some of the most head-scratching topics under the SEO umbrella. However, when used correctly, they can help to better communicate your website’s overall quality to search engine robots. In this article, I’ll walk you through what you need to know about sitemaps, the difference between XML and HTML sitemaps, robots.txt files, and the SEO benefits of each.

Read straight through, or jump to the section you want to read!

What is an XML sitemap?

What is an HTML sitemap?

What is a robots.txt file?

How to submit your sitemap to Google Search Console

What is an XML sitemap?

A sitemap is essentially a map of your entire website, and there are two types: XML and HTML. Simply put, XML sitemaps help to guide search engine robots, while HTML sitemaps help to guide your website visitors.

Google states that you may need a sitemap if you have a large website, a lot of archived content pages with poor internal linking, a new site with few backlinks, or an image- or video-centric website. Nevertheless, I think all websites can benefit from using an XML sitemap specifically because it is yet another tool to improve communication between your website and search engine robots. As SEO specialists, that’s one of our goals, right?

Within an XML sitemap, you can list your website’s important, SEO-relevant URLs to hint to search engine robots that they should crawl these pages. However, including a URL in your sitemap does not guarantee that search engine robots will crawl it or that it will be indexed and shown in search engine results pages (SERPs). Even so, an XML sitemap can help search engine robots crawl your URLs more intelligently, taking some of the guesswork out of their jobs. Today, Google, Yahoo!, and Microsoft Bing support XML sitemaps; they even jointly sponsor sitemaps.org, much as they did for schema.org!

XML Sitemap Tags

You can remove even more of the guesswork by including the required <loc> (location) tag and the optional <lastmod> (last modification) tag in your XML sitemap.

<loc> Tag

Use the <loc> tag, the only required tag inside each <url> entry, to specify the canonical version of the page’s URL with the correct protocol. This helps prevent duplicate content issues. For example, if your website uses https rather than http, include https in the <loc> tag. You should also specify whether or not your website uses www. In the example below, the canonical URL includes both the correct protocol (https) and the www version of the domain.

```xml
<url>
  <loc>https://www.bluefrogdm.com/blog/sitemap-seo-benefits</loc>
  <!-- <lastmod> uses W3C Datetime format: YYYY-MM-DD -->
  <lastmod>2020-07-15</lastmod>
</url>
```

<lastmod> Tag

Use the <lastmod> tag, an optional but highly recommended tag, to tell search engine robots when the page was last modified. This tag communicates both freshness of content and the original publisher to search engines. While search engines like Google prefer fresh content, don’t try to trick them by updating your <lastmod> values when the content hasn’t actually changed. Google only relies on <lastmod> when it is consistently accurate, and manipulating it could result in a penalty that significantly hurts your website’s visibility in SERPs.
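If you generate your sitemap with a script rather than a plugin, the <loc> and <lastmod> tags map directly onto data you probably already have. Below is a minimal Python sketch using only the standard library, assuming you can supply (canonical URL, last-modified date) pairs; the helper name build_sitemap is hypothetical.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, date]]) -> str:
    """Build a <urlset> document from (canonical URL, last-modified date) pairs.

    build_sitemap is a hypothetical helper, not part of any SEO tool.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        # <loc>: the canonical URL, including protocol and www/non-www version.
        ET.SubElement(url, "loc").text = loc
        # <lastmod>: date.isoformat() yields YYYY-MM-DD, a valid W3C Datetime value.
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap([
        ("https://www.bluefrogdm.com/blog/sitemap-seo-benefits", date(2020, 7, 15)),
    ]))
```

Passing a real date object (instead of a hand-typed string) is what prevents invalid values like a month of 15.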

<priority> Tag

Another optional tag is <priority>, which signals how important you consider each sitemap URL on a scale of 0.0 (lowest priority) to 1.0 (highest priority). However, Google states that it ignores the <priority> value when reading your sitemap.

In addition to including the <loc> and <lastmod> tags in your XML sitemap, make sure each sitemap file stays within 50 MB (uncompressed) and 50,000 URLs. If your file is too large or lists more than 50,000 URLs, Google recommends splitting it into multiple sitemaps. For example, you can create one sitemap for blog post URLs, a second for product page URLs, and so on. Then, you can list all of them in a single sitemap index file. Regardless of the route you take, make sure you submit your sitemaps in Google Search Console and include your sitemap URLs in your robots.txt file to help Google and other search engines find your sitemaps easily. More on both of these topics in a bit!
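The 50,000-URL limit is easy to enforce in code. This Python sketch splits a URL list into chunks and writes a sitemap index pointing to each chunk; the function names and the child sitemap URLs (such as https://www.example.com/sitemap-1.xml) are hypothetical. It checks the 50 MB limit separately, because the URL count alone doesn’t guarantee the file size.

```python
from xml.sax.saxutils import escape

MAX_URLS_PER_SITEMAP = 50_000              # sitemaps.org per-file URL limit
MAX_BYTES_PER_SITEMAP = 50 * 1024 * 1024   # 50 MB, uncompressed


def chunk_urls(urls: list[str], size: int = MAX_URLS_PER_SITEMAP) -> list[list[str]]:
    """Split URLs into consecutive groups of at most `size` entries."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def within_size_limit(sitemap_xml: str) -> bool:
    """True if the UTF-8 encoded file is at most 50 MB before compression."""
    return len(sitemap_xml.encode("utf-8")) <= MAX_BYTES_PER_SITEMAP


def build_sitemap_index(sitemap_urls: list[str]) -> str:
    """Return a <sitemapindex> file listing each child sitemap's URL."""
    entries = "".join(
        f"  <sitemap>\n    <loc>{escape(url)}</loc>\n  </sitemap>\n"
        for url in sitemap_urls
    )
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        '<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n'
        + entries
        + "</sitemapindex>\n"
    )


if __name__ == "__main__":
    # Hypothetical site with 120,000 product URLs, split into 3 child sitemaps.
    urls = [f"https://www.example.com/products/{i}" for i in range(120_000)]
    chunks = chunk_urls(urls)
    child_urls = [f"https://www.example.com/sitemap-{n}.xml" for n in range(1, len(chunks) + 1)]
    print(build_sitemap_index(child_urls))
```

Splitting by content type (blog posts, products) instead of by count works the same way: each group becomes one child sitemap listed in the index.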

Other Types of XML Sitemaps

In addition to the standard XML sitemap, there are several other types for particular use cases. The two most common are XML image sitemaps and XML video sitemaps.

XML Image Sitemap

Similar to the standard XML sitemap, an XML image sitemap helps to specify your most important images for search engine robots. Since most websites embed images directly within a web page’s content, search engines can crawl these images alongside the content. This makes image sitemaps unnecessary for most businesses.

Only use an XML image sitemap if images play an important role in your business; for example, a stock image website that relies on traffic from Google Images search.
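An image sitemap is a standard sitemap with Google’s image extension added: each <url> entry lists the images on that page inside <image:image> elements. A minimal Python sketch, assuming the page and image URLs (example.com) are hypothetical and that you only need the <image:loc> tag:

```python
from xml.sax.saxutils import escape

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"  # Google image extension


def build_image_sitemap(pages: dict[str, list[str]]) -> str:
    """Map each page URL to the image URLs that appear on that page."""
    lines = [
        '<?xml version="1.0" encoding="UTF-8"?>',
        f'<urlset xmlns="{SITEMAP_NS}" xmlns:image="{IMAGE_NS}">',
    ]
    for page_url, image_urls in pages.items():
        lines.append("  <url>")
        lines.append(f"    <loc>{escape(page_url)}</loc>")
        for image_url in image_urls:
            # escape() turns "&" in query strings into "&amp;" so the XML stays valid.
            lines.append("    <image:image>")
            lines.append(f"      <image:loc>{escape(image_url)}</image:loc>")
            lines.append("    </image:image>")
        lines.append("  </url>")
    lines.append("</urlset>")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_image_sitemap({
        "https://www.example.com/photos/lake-sunrise": [
            "https://www.example.com/images/lake-sunrise.jpg",
        ],
    }))
```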

If images do not play a significant role in your business, use ImageObject schema markup to call out specific image properties, such as a caption or thumbnail, to search engines.

XML Video Sitemap

Similar to XML image sitemaps, XML video sitemaps allow you to specify your most important video assets for search engine robots, but only use this type of sitemap if videos play a crucial role in your business.

For businesses where videos do not play an important role, use VideoObject schema markup to highlight specific video properties, such as the video’s frame size and quality, for search engines.
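VideoObject markup is usually added to the page as a JSON-LD script. This Python sketch builds one from schema.org properties, including videoFrameSize and videoQuality; the function name and all sample values are hypothetical, and you should confirm which properties Google requires for video rich results before relying on it.

```python
import json


def video_object_jsonld(name: str, description: str, thumbnail_url: str,
                        upload_date: str, content_url: str,
                        frame_size: str, quality: str) -> str:
    """Return a <script type="application/ld+json"> tag describing one video."""
    data = {
        "@context": "https://schema.org",
        "@type": "VideoObject",
        "name": name,
        "description": description,
        "thumbnailUrl": thumbnail_url,
        "uploadDate": upload_date,     # ISO 8601 date, e.g. "2020-07-15"
        "contentUrl": content_url,
        "videoFrameSize": frame_size,  # e.g. "1920x1080"
        "videoQuality": quality,       # e.g. "HD"
    }
    # Escape "</" so a value can never close the <script> element early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


if __name__ == "__main__":
    print(video_object_jsonld(
        "How to Rig a Paddle Tail Swimbait",
        "A short rigging tutorial.",
        "https://www.example.com/thumbs/swimbait.jpg",
        "2020-07-15",
        "https://www.example.com/videos/swimbait.mp4",
        "1920x1080",
        "HD",
    ))
```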

SEO Benefits of XML Sitemaps

By including your website’s most important, SEO-relevant URLs in your XML sitemap, you help search engine robots to crawl your URLs more intelligently. This can ultimately influence how search engines view your website’s quality. Ideally, you should exclude the following pages, according to Search Engine Journal:

- Non-canonical pages

- Duplicate pages

- Paginated pages

- Parameter- or session ID-based URLs

- Site search result pages

- Reply to comment URLs

- Share via email URLs

- URLs created by filtering that are unnecessary for SEO

- Archive pages

- Any redirections (3xx), missing pages (4xx), or server error pages (5xx)

- Pages blocked by robots.txt

- Pages with noindex

- Resource pages accessible by a lead gen form (e.g. case study download)

- Utility pages that are useful to users, but not intended landing pages (e.g. login page, privacy policy, etc.)
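If you export crawl data from an SEO tool, several of these exclusion rules can be applied automatically. The Python sketch below assumes a hypothetical PageRecord row (the field names and the UTILITY_PATHS set are illustrative, not any tool’s export format) and covers only the rules that can be checked from that data.

```python
from dataclasses import dataclass
from urllib.parse import urlsplit


@dataclass
class PageRecord:
    """Hypothetical crawl-audit row; field names are illustrative."""
    url: str
    status_code: int
    canonical_url: str
    noindex: bool = False
    blocked_by_robots: bool = False


# Hypothetical utility paths that aren't intended landing pages.
UTILITY_PATHS = {"/login", "/privacy-policy", "/search"}


def belongs_in_sitemap(page: PageRecord) -> bool:
    """Apply a subset of the exclusion rules listed above."""
    parts = urlsplit(page.url)
    return (
        page.status_code == 200                  # drops 3xx, 4xx, and 5xx URLs
        and page.canonical_url == page.url       # drops non-canonical and duplicate pages
        and not page.noindex                     # drops pages with noindex
        and not page.blocked_by_robots           # drops pages blocked by robots.txt
        and not parts.query                      # drops parameter and session ID URLs
        and parts.path.rstrip("/") not in UTILITY_PATHS
    )
```

Rules that depend on intent, such as gated resource pages, still need a human decision.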

Google and other search engines likely view these pages as less relevant to SEO because they don’t represent typical landing pages. If you don’t want your privacy page, for example, to be the first page of a visitor’s journey on your website, then leave it out of your XML sitemap. Use this rule of thumb to help determine which pages to include or exclude from your sitemap.

Remember, just because you leave a URL out of your sitemap doesn’t mean it’s blocked from search engines. Search engines can still crawl these URLs through links to these pages; your XML sitemap just gives them an indication of your more important URLs that deserve crawl budget. It’s also important to note that search engines will not always crawl your entire website during every visit; they follow random paths and, typically, do not backtrack, so they may crawl some of your internal pages and then follow an external link to a completely different website.

All in all, use an XML sitemap to communicate your most important, SEO-relevant URLs to search engine robots. Including less important URLs in your sitemap can dilute search engines’ perception of your website’s overall quality (authority) and waste valuable crawl budget, which can take away indexation opportunities from key URLs.

What is an HTML sitemap?

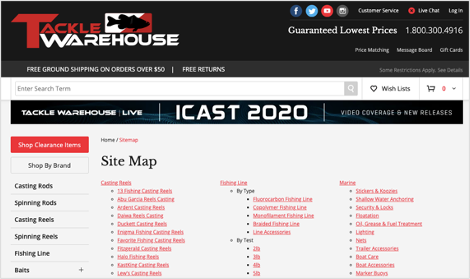

The second type of sitemap, the HTML sitemap, helps guide visitors more than search engine robots and typically provides limited SEO value. HTML sitemaps use anchor links to list all of a website’s URLs by category so visitors can quickly find a specific page. Tackle Warehouse, for example, links to its sitemap in its footer; the sitemap includes links to every product on the website, from Paddle Tail Swimbaits to Shimano Spinning Reels.
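Because an HTML sitemap is just a page of grouped anchor links, generating one is straightforward. A minimal Python sketch, assuming your pages are already grouped by category; the category names and paths are hypothetical, loosely modeled on the Tackle Warehouse example.

```python
from html import escape


def build_html_sitemap(categories: dict[str, list[tuple[str, str]]]) -> str:
    """Render each category as a heading followed by a list of anchor links."""
    parts = ["<nav>"]
    for category, links in categories.items():
        parts.append(f"  <h2>{escape(category)}</h2>")
        parts.append("  <ul>")
        for title, href in links:
            # escape() protects both the link text and the quoted href attribute.
            parts.append(f'    <li><a href="{escape(href)}">{escape(title)}</a></li>')
        parts.append("  </ul>")
    parts.append("</nav>")
    return "\n".join(parts)


if __name__ == "__main__":
    print(build_html_sitemap({
        "Swimbaits": [("Paddle Tail Swimbaits", "/swimbaits/paddle-tail")],
        "Rods & Reels": [("Shimano Spinning Reels", "/reels/shimano-spinning")],
    }))
```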

HTML sitemaps were more common before website navigation menus moved into website headers, but they can still add some SEO value for specific use cases.

SEO Benefits of HTML Sitemaps

Consider using an HTML sitemap if your primary website navigation does not link to all of your website pages. Let’s look at Tackle Warehouse again; an HTML sitemap could provide some SEO benefits because their navigation menu does not link to every page of their website. Instead, their main navigation includes the highest level of product categories with some links to more specific subcategories.

Other reasons to use an HTML sitemap: you have a large section of your site that search engines can’t reach through your other links, you have important pages that you would normally bury in or exclude from the main navigation menu (e.g., support pages), or visitors actually use your sitemap. In that last case, consider moving your most popular sitemap links into your navigation menu to further optimize it for search engines.

What is a robots.txt file?

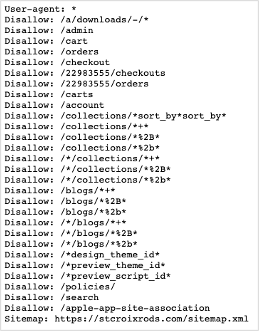

Your robots.txt file is usually the first place search engine robots visit when they access your website, so it’s a good idea to include your XML sitemap(s) here to help search engines more easily discover your most important URLs. This simple text file is placed in your website’s root directory and tells search engine robots which pages they can and can’t crawl. Robotstxt.org specifies two important considerations when using robots.txt:

- Search engine robots can ignore your robots.txt file (e.g. malware robots).

- Anyone can view a domain’s robots.txt file by visiting domain.com/robots.txt.

Example of robots.txt file (Source: https://stcroixrods.com/robots.txt)


The key takeaway here: don’t use the robots.txt file to hide information. Even if you disallow search engine robots from crawling specific pages, search engines can still index those URLs (without crawling their content) if another domain links to one of them. If you want to keep a URL out of search results, use a robots noindex tag instead, and don’t also disallow that URL in robots.txt: a crawler has to be able to fetch the page to see the noindex tag.
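A noindex directive only works if crawlers can read it, so it’s worth auditing which of your pages actually carry one. This Python sketch uses the standard-library html.parser to look for a <meta name="robots"> tag containing noindex; the class and function names are hypothetical, and it does not check the X-Robots-Tag HTTP header, which can also carry noindex.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        # HTMLParser lowercases tag and attribute names, but not attribute values.
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))


def has_noindex(html_text: str) -> bool:
    """True if the page's robots meta tag includes a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html_text)
    return "noindex" in parser.directives


if __name__ == "__main__":
    print(has_noindex('<head><meta name="robots" content="noindex, follow"></head>'))
```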

SEO Benefits of Robots.txt Files

The robots.txt file tells search engines how they should crawl, or not crawl, a website, and search engine robots use those directives to guide the crawl actions they take on your site. It can also point them directly to your XML sitemap, which contains your most important URLs.
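To see how a crawler might interpret your directives, you can test a robots.txt file with Python’s standard-library urllib.robotparser module (site_maps() needs Python 3.8+). The file contents below are hypothetical, and Python’s parser is only an approximation of Googlebot: it uses simple prefix matching and doesn’t support Google’s * and $ wildcards inside paths.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents for www.example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search

Sitemap: https://www.example.com/sitemap_index.xml
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rules = load_rules(ROBOTS_TXT)
    for url in ("https://www.example.com/blog/sitemap-seo-benefits",
                "https://www.example.com/cart/checkout"):
        verdict = "may crawl" if rules.can_fetch("Googlebot", url) else "disallowed"
        print(f"{url}: {verdict}")
    # Sitemap lines apply to the whole file, independent of any User-agent group.
    print("Sitemaps:", rules.site_maps())
```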

Still interested in learning more about robots.txt? Check out Moz’s article on all things robots.txt!

How to Submit Your Sitemap to Google Search Console

To tell Google where it can find your website’s sitemap, submit your sitemap through Google Search Console (GSC).

You must have owner permission for a property to submit a sitemap using GSC’s Sitemaps tool. If you don’t have owner permission, you can reference your sitemap in your robots.txt file instead.

Next, make sure your sitemap uses one of Google’s accepted sitemap formats, then follow these steps:

- Open GSC and navigate to Sitemaps in the left-hand menu.

- Enter your sitemap URL under Add a new sitemap.

- Click Submit.
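Before submitting, it can help to catch obvious problems locally. This Python sketch only checks XML sitemaps (Google also accepts other formats, such as RSS, Atom, and plain-text sitemaps); the function name check_sitemap and its expected_origin argument are hypothetical. It verifies that the file parses, has a urlset or sitemapindex root, stays within the 50,000-URL and 50 MB limits, and that every <loc> uses your canonical protocol and host.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"
MAX_URLS = 50_000
MAX_BYTES = 50 * 1024 * 1024  # 50 MB, uncompressed


def check_sitemap(xml_bytes: bytes, expected_origin: str) -> list[str]:
    """Return problems found in a sitemap; an empty list means none were detected."""
    problems = []
    if len(xml_bytes) > MAX_BYTES:
        problems.append("file is larger than 50 MB uncompressed")
    try:
        root = ET.fromstring(xml_bytes)
    except ET.ParseError as err:
        return problems + [f"not well-formed XML: {err}"]
    if root.tag not in (NS + "urlset", NS + "sitemapindex"):
        problems.append(f"unexpected root element {root.tag}")
    # Only sitemaps.org <loc> elements are counted, so <image:loc> entries are skipped.
    locs = [(el.text or "").strip() for el in root.iter(NS + "loc")]
    if len(locs) > MAX_URLS:
        problems.append(f"{len(locs)} URLs exceeds the 50,000 limit")
    for loc in locs:
        parts = urlsplit(loc)
        if f"{parts.scheme}://{parts.netloc}" != expected_origin:
            problems.append(f"{loc} does not match {expected_origin}")
    return problems
```

An empty result doesn’t guarantee Google will accept the sitemap; the Sitemaps report remains the authoritative check.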

After you submit your sitemap, you can review its status (e.g. all URLs were successfully crawled) and monitor any critical errors. Take a look at Google’s Sitemaps report help document to understand how to read the report and the complete list of potential errors.

Key Takeaways

Sitemaps and robots.txt files don’t have to be intimidating. If you can remember the following key takeaways, you’ll be well on your way to improving how your website communicates with search engine robots.

- Use an XML sitemap to communicate your most important, SEO-relevant URLs to search engines.

- Leave less important URLs out of your sitemap because they can dilute search engines’ perception of your website’s authority and waste crawl budget.

- Only use an HTML sitemap in particular use cases.

- Don’t hide information using your robots.txt file.

- Use your robots.txt file to communicate how search engine robots should crawl your website.

- Include your sitemap in your robots.txt file.

- Upload your sitemap to GSC to detect any errors that prevent search engines from crawling your website.

Stay tuned for the next post in our Local SEO Series: Best Practices for 301 Redirects + download the Local SEO Checklist.